Original author: Grace Hill

Thanks to Brian Retford, SunYi, Jason Morton, Shumo, Feng Boyuan, Daniel, Aaron Greenblatt, Nick Matthew, Baz, Marcin, and Brent for their valuable insights, feedback, and reviews of this article.

For us crypto enthusiasts, artificial intelligence has been all the rage for a while. Interestingly, no one wants to see AI run amok. Blockchain was originally invented to keep the dollar from getting out of control, so we might try to do the same for artificial intelligence. We also now have a new technology, zero-knowledge proofs, for making sure things can’t go wrong. However, to harness the beast that is AI, we must first understand how it works.

A brief introduction to machine learning

Artificial intelligence has gone through several name changes, from expert systems to neural networks, then to graphical models, and finally to machine learning. All of these are subsets of “artificial intelligence” that have been given different names as our understanding of AI has continued to grow. Let’s dig a little deeper into machine learning and demystify it.

Note: Today, most machine learning models are neural networks due to their superior performance in many tasks. In this article, “machine learning” mainly refers to neural-network-based machine learning.

How does machine learning work?

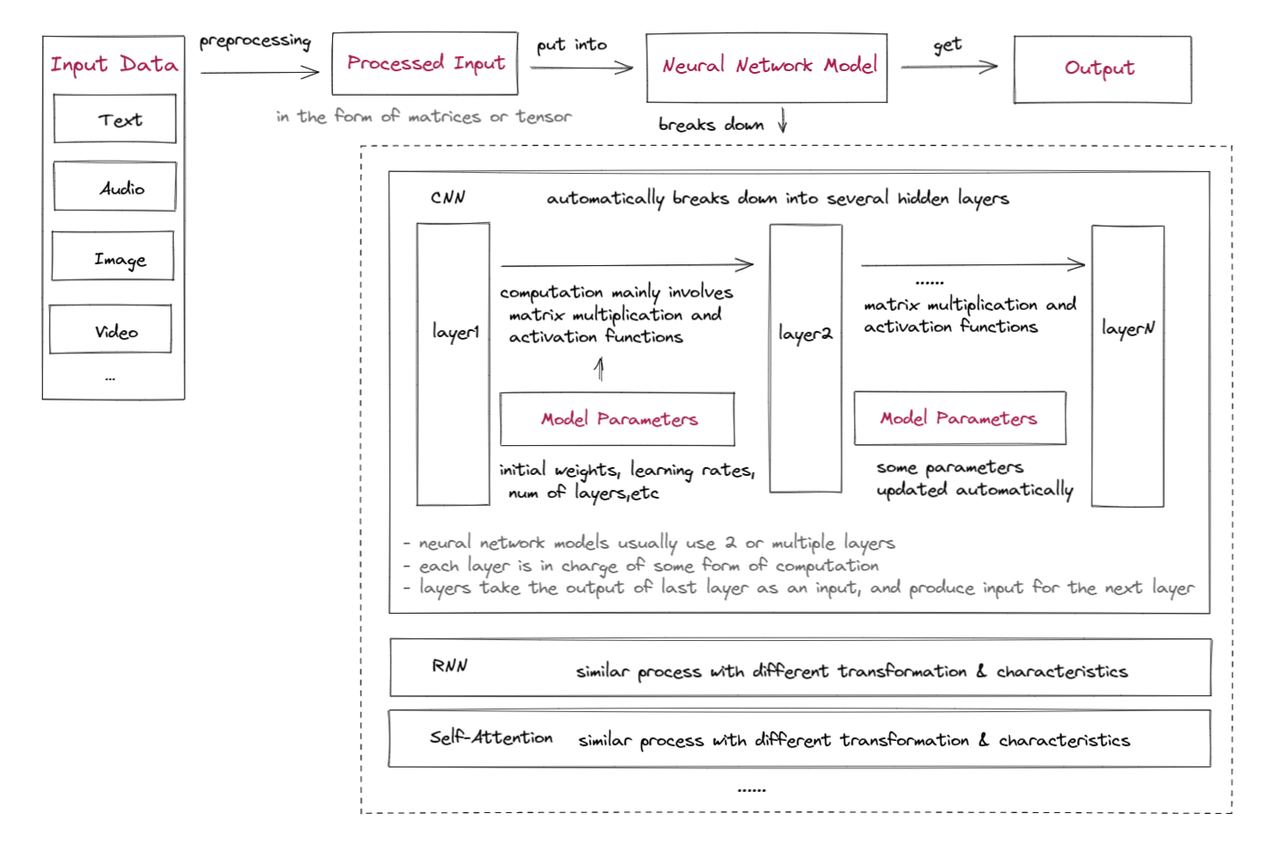

First, let’s take a quick look at the inner workings of machine learning:

Input data preprocessing:

Input data needs to be processed into a format that can be used as input to the model. This usually involves preprocessing and feature engineering to extract useful information and convert the data into a suitable form, such as input matrices or tensors (high-dimensional matrices). This is the expert system approach. With the advent of deep learning, the processing layer automatically handles preprocessing.

Set initial model parameters:

Initial model parameters include the number of layers, activation functions, initial weights, biases, learning rate, and so on. Some of these parameters are adjusted by optimization algorithms during training to improve model accuracy.

Training data:

Input data is fed into a neural network, typically starting with one or more feature extraction and relationship modeling layers, such as convolutional layers (CNN), recurrent layers (RNN), or self-attention layers. These layers learn to extract relevant features from the input data and model the relationships between these features.

The outputs of these layers are then passed to one or more additional layers, which perform different calculations and transformations on the input data. These layers mainly involve multiplication by learnable weight matrices and the application of nonlinear activation functions, but may also include other operations such as convolution and pooling in convolutional neural networks, or iteration in recurrent neural networks. The output of each layer serves as input to the next layer in the model, or as the final predicted output.

Get the model’s output:

The output of a neural network calculation is usually a vector or matrix that represents the probability of image classification, a sentiment analysis score, or other results, depending on the application of the network. There is usually also an error evaluation and parameter update module that automatically updates parameters according to the purpose of the model.

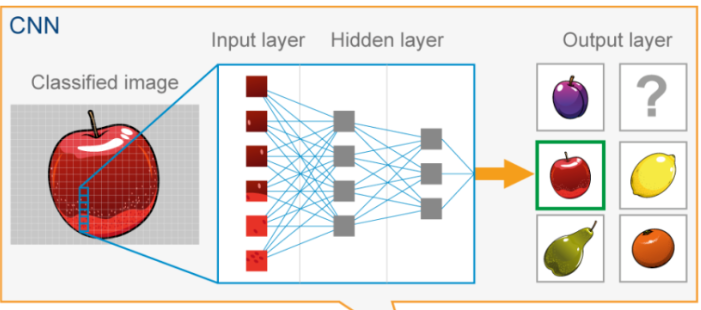

If the above explanation seems too obscure, you can refer to the following example of using a CNN model to identify apple pictures.

Load the image into the model as a matrix of pixel values. This matrix can be represented as a 3D tensor with dimensions (height, width, channels).

Set the initial parameters of the CNN model.

The input image is passed through multiple hidden layers in a CNN, with each layer applying convolutional filters to extract increasingly complex features from the image. The output of each layer is passed through a nonlinear activation function and then pooled to reduce the dimensionality of the feature map. The last layer is usually a fully connected layer that generates output predictions based on the extracted features.

The final output of the CNN is the class with the highest probability. This is the predicted label of the input image.
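The apple example above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a trained model: the image is a single-channel 5x5 grid rather than a full (height, width, channels) tensor, and the kernel, weights, biases, and labels are made-up values chosen only to make the data flow visible.

```python
import math

def conv2d(image, kernel):
    """Slide the kernel over a single-channel image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[i + di][j + dj] * kernel[di][dj]
             for di in range(kh) for dj in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def relu(fmap):
    """Nonlinear activation applied element-wise."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Keep the largest value in each 2x2 window to shrink the feature map."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]

def dense(vector, weights, biases):
    """Fully connected layer: one weighted sum per output class."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, biases)]

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(image, kernel, weights, biases, labels):
    fmap = max_pool2x2(relu(conv2d(image, kernel)))
    features = [v for row in fmap for v in row]   # flatten
    probs = softmax(dense(features, weights, biases))
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs

# Made-up inputs and parameters, for illustration only.
image = [
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [1, 1, 1, 1, 1],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
]
kernel = [[1, 0], [0, 1]]
weights = [[0.5, 0.5, 0.5, 0.5], [-0.5, -0.5, -0.5, -0.5]]
biases = [0.0, 0.0]

label, probs = predict(image, kernel, weights, biases, ["apple", "not apple"])
print(label)  # apple
```

A real CNN stacks many such convolution, activation, and pooling stages and learns the kernel and weight values during training; the structure of the forward pass is the same.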

A trust framework for machine learning

We can summarize the above into a machine learning trust framework, including four basic layers of machine learning. The entire machine learning process needs these layers to be trustworthy in order to be reliable:

Input: Raw data needs to be preprocessed and sometimes kept secret.

Integrity: Input data has not been tampered with, not contaminated by malicious input, and has been preprocessed correctly.

Privacy: Input data will not be disclosed if necessary.

Output: Needs to be accurately generated and transmitted.

Integrity: The output is generated correctly.

Privacy: Output will not be leaked if required.

Model Type/Algorithm: The model should be computed correctly.

Integrity: The model executes correctly.

Privacy: The model itself or the calculations will not be leaked if necessary.

Different neural network models have different algorithms and layers suitable for different use cases and inputs.

Convolutional neural networks (CNNs) are commonly used for tasks involving grid-like data, such as images, where local patterns and features can be captured by applying convolution operations to small input regions.

Recurrent neural networks (RNN), on the other hand, are well suited for sequential data, such as time series or natural language, where hidden states can capture information from previous time steps and model temporal dependencies.

Self-attention layers are useful for capturing relationships between elements in an input sequence, making them effective for tasks such as machine translation or summarization where long-range dependencies are crucial.

Other types of models also exist, including multilayer perceptrons (MLPs) and others.

Model parameters: In some cases, parameters should be transparent or democratically generated, but in all cases not susceptible to tampering.

Integrity: Parameters are generated, maintained and managed in the correct way.

Privacy: Model owners often keep machine learning model parameters secret to protect the intellectual property and competitive advantage of the organization that developed the model. This was common even before transformer models became extremely expensive to train, and it remains a major issue for the industry.

The trust issue in machine learning

With the explosive growth of machine learning (ML) applications (a CAGR of over 20%) and their increasing integration into daily life, as with the recent popularity of ChatGPT, the issue of trust in ML is becoming increasingly critical and cannot be ignored. It is therefore essential to identify and address these trust issues to ensure responsible use of AI and prevent its potential misuse. But what exactly are the problems? Let’s take a closer look.

Insufficient transparency or provability

Trust issues have long plagued machine learning for two main reasons:

Private nature: As mentioned above, model parameters are often private, and in some cases model inputs need to be kept private as well, which naturally creates some trust issues between model owners and model users.

Algorithmic black boxes: Machine learning models are sometimes called “black boxes” because they involve many automated steps in their calculations that are difficult to understand or explain. These steps involve complex algorithms and large amounts of data, resulting in uncertain and sometimes random outputs, making the algorithms vulnerable to accusations of bias or even discrimination.

Before going any further, one of the larger assumptions of this article is that the model is ready to use, meaning that it is well trained and fit for purpose. Models may not be suitable for every situation, and models improve at an alarming rate. The normal lifespan of a machine learning model is between 2 and 18 months, depending on the application scenario.

A detailed breakdown of trust issues in machine learning

There are some trust issues in the model training process, and Gensyn is currently working on generating valid proofs to facilitate this process. However, this article mainly focuses on the model inference process. Let’s now use four building blocks of machine learning to uncover potential trust issues:

Input:

Data sources are tamper-proof

Private input data is not stolen by model operators (privacy issue)

Model:

The model itself is as accurate as advertised

The calculation process is completed correctly

Parameters:

Model parameters have not been changed or are as advertised

During the process, model parameters that have value to the model owner are not leaked (privacy issue)

Output:

The output is provably correct (may improve as all elements above are improved)

How to apply ZK to the machine learning trust framework?

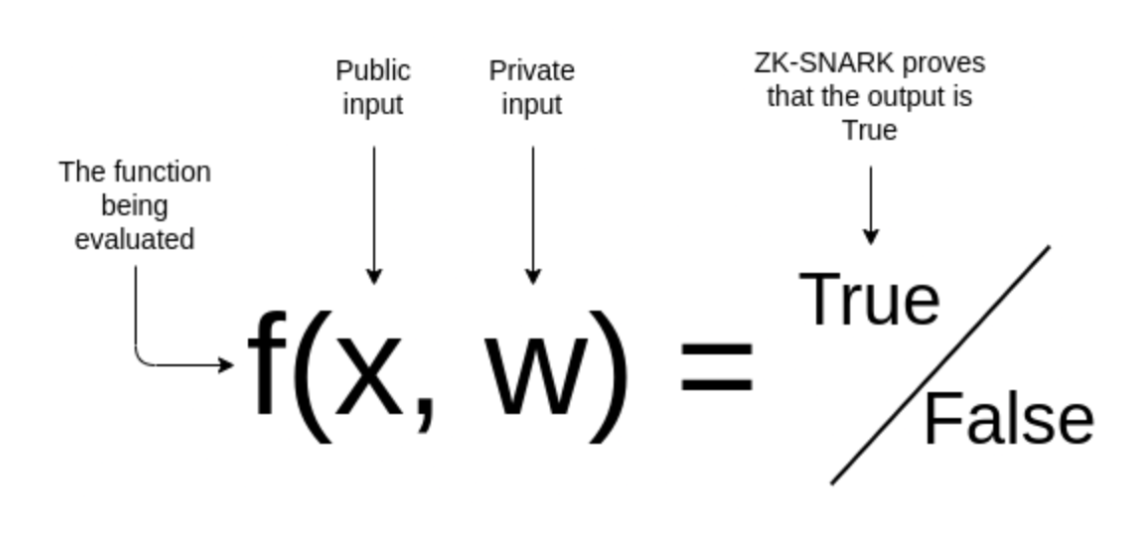

Some of the above trust issues can be solved by going on-chain; uploading inputs and machine learning parameters to the chain, and calculating models on the chain, can ensure the correctness of inputs, parameters, and model calculations. But this approach may sacrifice scalability and privacy. Giza is doing this work on Starknet, but due to cost issues it only supports simple machine learning models like regression, not neural networks. ZK technology can solve the above trust problems more effectively. Currently, ZK in ZKML usually refers to zkSNARK. First, let’s quickly review some basic concepts of zkSNARK:

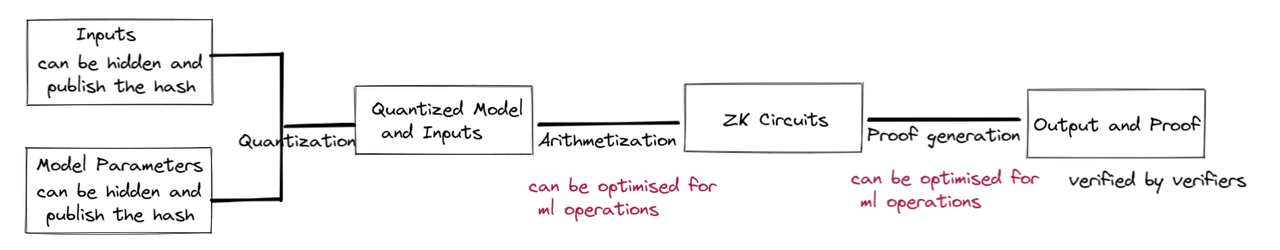

A zkSNARK proof shows that I know some secret input w such that the result of computing f on it is OUT, without telling you what w is. The proof generation process can be summarized in the following steps:

Formulate the statement to be proved: f(x, w)=true

I correctly classified this image x using a machine learning model f with private parameters w.

Convert statements into circuits (arithmetization): Different circuit construction methods include R1CS, QAP, Plonkish, etc.

Compared to other use cases, ZKML requires an extra step called quantization. Neural network inference is typically done using floating-point arithmetic, which is very expensive to emulate in the prime field of arithmetic circuits. Different quantization methods offer different trade-offs between accuracy and hardware requirements.

Some circuit construction methods, such as R1CS, are not efficient for neural networks. This part can be tuned to improve performance.
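To make the quantization step more concrete, here is a minimal sketch of fixed-point quantization followed by arithmetic in a prime field. The modulus, the scale, and all function names are assumptions chosen for illustration; real ZKML frameworks pick their own field and quantization scheme.

```python
# Hypothetical parameters, for illustration only.
FIELD_MODULUS = 2**61 - 1   # a Mersenne prime standing in for a SNARK field
SCALE_BITS = 8              # fixed-point scale: 1.0 is stored as 256
SCALE = 1 << SCALE_BITS

def quantize(x):
    """Map a float to a fixed-point integer (this is where precision is lost)."""
    return round(x * SCALE)

def dequantize(q):
    """Map a fixed-point integer back to a float."""
    return q / SCALE

def to_field(q):
    """Encode a signed integer as a field element (negatives wrap around)."""
    return q % FIELD_MODULUS

def from_field(a):
    """Decode a field element back to a signed integer."""
    return a - FIELD_MODULUS if a > FIELD_MODULUS // 2 else a

def field_dot(xs, ws):
    """Dot product using only field additions and multiplications."""
    acc = 0
    for x, w in zip(xs, ws):
        acc = (acc + to_field(quantize(x)) * to_field(quantize(w))) % FIELD_MODULUS
    return acc

def quantized_dot(xs, ws):
    # Each product carries a factor of SCALE**2, so divide it out once at the end.
    return from_field(field_dot(xs, ws)) / (SCALE * SCALE)

inputs = [0.5, -1.25, 2.0]
weights = [1.5, 0.75, -0.125]
print(quantized_dot(inputs, weights))  # -0.4375
```

A larger `SCALE_BITS` keeps more precision but produces larger intermediate values, which is one form of the accuracy-versus-cost trade-off described above.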

Generate a proving key and a verification key

Create a witness: when w=w*, f(x, w)=true

Create a hash commitment: The prover commits to the witness w* by hashing it with a cryptographic hash function. This hash can be made public.

This helps ensure that private inputs or model parameters have not been tampered with or modified during the computation. This step is crucial because even minor modifications can have a significant impact on the model’s behavior and output.
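The commitment idea can be sketched with a salted hash. This is only an illustration of commit-then-check: SHA-256, the JSON encoding, and the function names are assumptions, and in an actual ZKML system the check would happen inside the circuit, typically with a hash function chosen to be cheap to prove.

```python
import hashlib
import hmac
import json
import secrets

def commit(weights, salt=None):
    """Return (commitment_hex, salt) for a list of model weights."""
    # A random salt keeps the commitment from leaking easily guessable weights.
    salt = secrets.token_bytes(32) if salt is None else salt
    encoded = json.dumps(weights, separators=(",", ":")).encode("utf-8")
    digest = hashlib.sha256(salt + encoded).hexdigest()
    return digest, salt

def verify_commitment(commitment, weights, salt):
    """Recompute the hash and compare it with the published commitment."""
    recomputed, _ = commit(weights, salt)
    return hmac.compare_digest(recomputed, commitment)

weights = [0.25, -1.5, 3.0]
commitment, salt = commit(weights)            # the commitment can be published

print(verify_commitment(commitment, weights, salt))               # True
print(verify_commitment(commitment, [0.25, -1.5, 3.0001], salt))  # False
```

Even a change in the fourth decimal place of one weight produces a different hash, which is what lets a verifier detect a swapped or modified model.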

Proof generation: Different proof systems use different proof generation algorithms.

Specialized zero-knowledge protocols need to be designed for machine learning operations, such as matrix multiplication and convolutional layers, so that these computations can be handled by efficient, sublinear-time protocols.

- Generic zkSNARK systems like Groth16 may not be able to handle neural networks efficiently due to the excessive computational load.

- Since 2020, many new ZK proof systems have emerged to optimize ZK proof for the model inference process, including vCNN, ZEN, ZKCNN, and pvCNN. However, most of them are optimized for CNN models. They can only be applied to some major datasets, such as MNIST or CIFAR-10.

- In 2022, Daniel Kang, Tatsunori Hashimoto, Ion Stoica, and Yi Sun (a founder of Axiom) proposed a new proof scheme based on Halo2, achieving ZK proof generation for the ImageNet dataset for the first time. Their optimizations focus on the arithmetization step, with novel lookup arguments for nonlinearities and reuse of subcircuits across layers.

- Modulus Labs is benchmarking different proof systems for on-chain inference. It found that ZKCNN and plonky2 perform best in terms of proving time, and that ZKCNN and halo2 perform well in terms of peak prover memory usage. Plonky2, though fast, pays for its speed with higher memory consumption, and ZKCNN is only suitable for CNN models. Modulus Labs is also developing a new zkSNARK system designed specifically for ZKML, as well as a new virtual machine.
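To give some intuition for why specialized protocols for operations like matrix multiplication pay off, here is a sketch of Freivalds' algorithm, a classic randomized check that a claimed product C equals A·B using only matrix-vector products. It is not zero-knowledge and is not taken from any of the systems listed above; it is included only as an assumption-labeled illustration that a structured operation can be verified more cheaply than it can be recomputed.

```python
import random

def matmul(a, b):
    """Plain O(n^3) matrix product, i.e. the work the prover does."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def matvec(m, v):
    """Matrix-vector product, O(n^2)."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]

def freivalds_check(a, b, c, rounds=20, rng=None):
    """Probabilistically check that a @ b == c without computing a @ b."""
    rng = rng or random.Random()
    n_cols = len(c[0])
    for _ in range(rounds):
        r = [rng.randint(0, 1) for _ in range(n_cols)]
        # a @ (b @ r) needs two O(n^2) products instead of one O(n^3) product.
        if matvec(a, matvec(b, r)) != matvec(c, r):
            return False          # a mismatch is conclusive
    return True                   # a wrong c survives each round with probability <= 1/2

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = matmul(a, b)
print(freivalds_check(a, b, c))   # True
```

A correct product always passes. An incorrect one is caught in each round with probability at least one half, so the chance of it slipping through all 20 rounds is at most 2^-20.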

Verify the proof: The verifier uses the verification key to check the proof without any knowledge of the witness.

Therefore, we can show that applying zero-knowledge techniques to machine learning models can solve many trust problems. Similar techniques using interactive verification can achieve similar results, but will require more resources on the verifier side and may face more privacy issues. It’s worth noting that depending on the specific model, generating proofs for them can take time and resources, so there will be trade-offs when ultimately applying this technology to real-world use cases.

Current state of existing solutions

Next, what are the existing solutions? Note that a model provider may have many reasons for not wanting to generate ZKML proofs. Those who are brave enough to try ZKML, and for whom the solution makes sense, can choose from a few different options depending on where the model and the inputs are:

If the input data is on-chain, consider using Axiom as a solution:

Axiom is building a zero-knowledge coprocessor for Ethereum to improve user access to blockchain data and provide a more sophisticated view of on-chain data. This makes reliable machine learning computations on on-chain data possible:

- First, Axiom imports on-chain data by storing Merkle roots of Ethereum block hashes in its smart contract AxiomV0, which are trustlessly verified through a ZK-SNARK verification process. The AxiomV0StoragePf contract then allows batch verification of arbitrary historical Ethereum storage proofs against the root of trust given by the block hashes cached in AxiomV0.

- Next, machine learning input data can be extracted from the imported historical data.

- Axiom can then apply verified machine learning operations on top, using optimized halo2 as a backend to verify the validity of each part of the computation.

- Finally, Axiom attaches a ZK proof to each query result, and the Axiom smart contract verifies that proof. Any interested party who wants the proof can access it from the smart contract.
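The idea of checking data against a stored Merkle root, as in the first step above, can be sketched as follows. This is a generic binary Merkle tree, not Axiom's contract logic: SHA-256 is an assumption made to keep the example dependency-free (Ethereum itself uses Keccak-256), and the leaf values are placeholders.

```python
import hashlib

def h(data: bytes) -> bytes:
    # Assumption: SHA-256 stands in for the chain's hash function.
    return hashlib.sha256(data).digest()

def merkle_root(leaves):
    """Root of a binary Merkle tree; the last node is duplicated on odd levels."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]

def merkle_proof(leaves, index):
    """Sibling hashes from leaf to root, each tagged with 'sibling is on the left'."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof

def verify(leaf, proof, root):
    """Recompute the path to the root using only the leaf and its siblings."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root

# Placeholder "block hashes"; only the root would need to be stored on-chain.
blocks = [b"block-%d" % i for i in range(5)]
root = merkle_root(blocks)
proof = merkle_proof(blocks, 3)

print(verify(b"block-3", proof, root))   # True
print(verify(b"forged", proof, root))    # False
```

The verifier only needs the root and a logarithmic number of sibling hashes, which is why caching a small set of roots is enough to anchor proofs about a long history of data.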

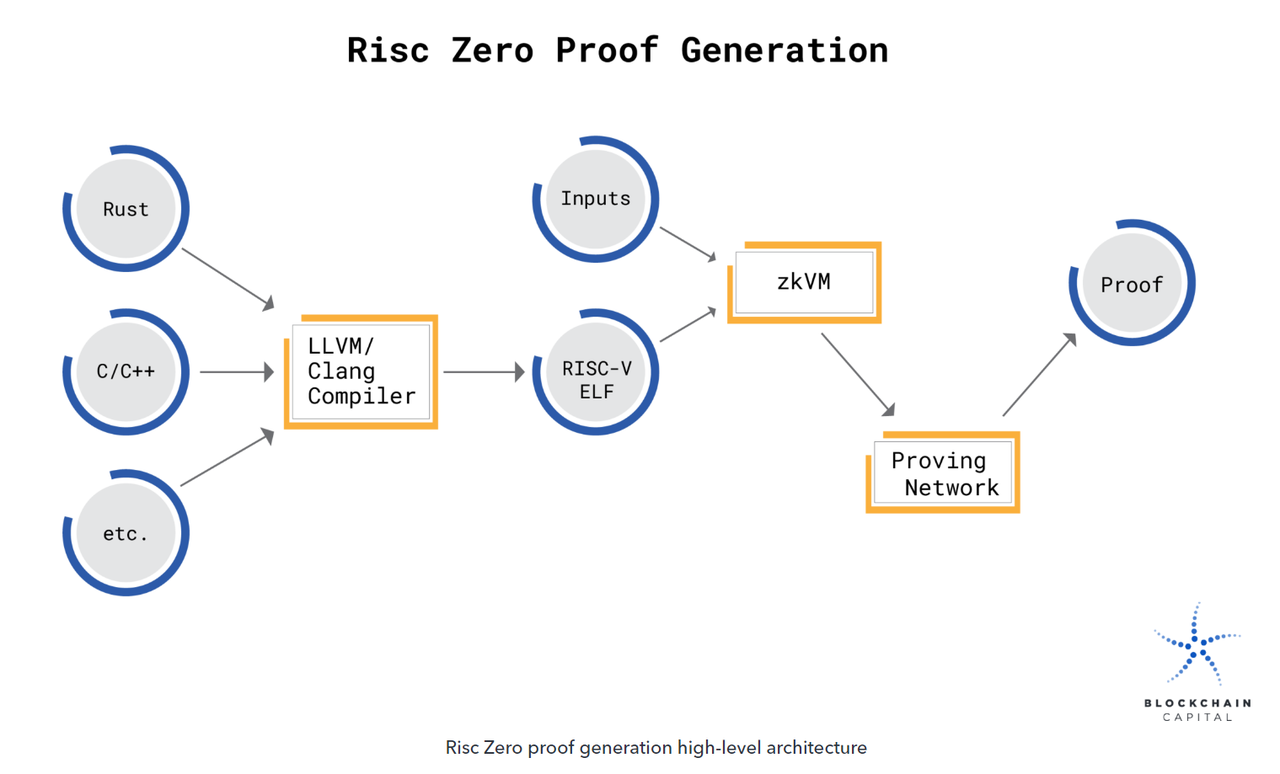

If you put your model on-chain, consider using RISC Zero as a solution:

By running a machine learning model in RISC Zero’s ZKVM, you can prove that the exact computations involved in the model were performed correctly. The computation and verification can be done offline in the user’s preferred environment, or in the Bonsai Network, a general-purpose rollup.

- First, the source code of the model needs to be compiled into a RISC-V binary. When this binary is executed in the ZKVM, the output is paired with a computational receipt containing a cryptographic seal. This seal serves as a zero-knowledge argument of computational integrity, linking a cryptographic imageID (which identifies the executed RISC-V binary) to the asserted code output so that third parties can verify it quickly.

- When a model is executed in ZKVM, calculations about state changes are done entirely inside the VM. It does not leak any information about the internal state of the model to the outside world.

- Once the model has been executed, the resulting seal becomes a zero-knowledge proof of computational integrity. The RISC Zero ZKVM is a RISC-V virtual machine that can generate zero-knowledge proofs of the code it executes. Using the ZKVM, it is possible to generate a cryptographic receipt that anyone can verify was produced by the ZKVM’s guest code. When the receipt is published, no other information about the code execution (such as the inputs provided) is revealed.

The specific process of generating ZK proofs involves an interactive protocol with a random oracle acting as the verifier. The seal on a RISC Zero receipt is essentially a transcript of this interactive protocol.
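The phrase "a random oracle acting as the verifier" describes what is usually called the Fiat-Shamir heuristic: the verifier's random challenge is replaced by a hash of the transcript so far. The sketch below applies it to a Schnorr proof of knowledge of a discrete logarithm. It is an assumption-labeled toy and not RISC Zero's proof system, which is far more involved; the group parameters are deliberately tiny and insecure.

```python
import hashlib
import secrets

# Toy group, far too small for real use: G = 2 has prime order Q = 11 modulo P = 23.
P, Q, G = 23, 11, 2

def challenge(*values):
    """Random-oracle stand-in: hash the transcript to a challenge in [0, Q)."""
    data = ",".join(str(v) for v in values).encode("utf-8")
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(secret):
    """Prove knowledge of `secret` for the public key y = G**secret mod P."""
    y = pow(G, secret, P)
    r = secrets.randbelow(Q)
    t = pow(G, r, P)             # prover's first message (a commitment)
    c = challenge(G, y, t)       # the hash plays the verifier's role
    s = (r + c * secret) % Q     # prover's response
    return y, (t, s)             # (t, s) is the recorded transcript

def verify(y, proof):
    """Check G**s == t * y**c, recomputing the challenge from the transcript."""
    t, s = proof
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P

public_key, proof = prove(secret=7)
print(verify(public_key, proof))   # True
```

Because the challenge is derived from a hash rather than sent by a live verifier, the pair `(t, s)` can be checked by anyone later, which is the sense in which a seal is a record of the interaction.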

If you want to import models directly from commonly used machine learning software such as TensorFlow or PyTorch, consider using ezkl as a solution:

Ezkl is a library and command-line tool for running inference on deep learning models and other computational graphs inside a zkSNARK.

- First, export the final model as a .onnx file and some sample inputs as a .json file.

- Then, point ezkl to .onnx and .json files to generate ZK-SNARK circuits that can prove ZKML statements.

Seems simple, right? The goal of ezkl is to provide an abstraction layer that allows high-level operations to be called and laid out in Halo2 circuits. Ezkl abstracts away a lot of complexity while maintaining incredible flexibility. Its quantized models use a scaling factor that is set automatically. It is flexible enough to switch to other proof systems as new solutions emerge. It also supports multiple types of virtual machines, including the EVM and WASM.

Regarding the proof system, ezkl customizes halo2 circuits through proof aggregation (turning hard-to-verify proofs into easy-to-verify ones through an intermediate step) and recursion (which can solve memory problems but is hard to adapt to halo2). Ezkl also optimizes the entire process through fusion and abstraction, which can reduce overhead through higher-level proving.

It is worth noting that, compared with other general-purpose ZKML projects, Accessor Labs focuses on providing purpose-built ZKML tools for fully on-chain games, which may involve AI NPCs, automatic gameplay updates, natural-language game interfaces, and so on.

Where are the use cases?

Solving the trust issue in machine learning with ZK technology means ML can now be applied to more high-stakes, high-assurance use cases than just keeping up with people’s conversations or telling pictures of cats from pictures of dogs. Web3 is already exploring many of these use cases. This is no coincidence: most Web3 applications run, or are intended to run, on blockchains, because blockchains have specific properties that let them operate securely, resist tampering, and compute deterministically. A verifiably well-behaved AI should be one that can operate in a trustless and decentralized environment, right?

Use cases where ZK+ML can be applied in Web3

Many Web3 applications sacrifice user experience for security and decentralization because that is clearly their priority, and infrastructure limitations exist. AI/ML has the potential to enrich user experiences, which is undoubtedly helpful but previously seemed impossible without compromise. Now, thanks to ZK, we can comfortably see the integration of AI/ML with Web3 applications without sacrificing too much in terms of security and decentralization.

Essentially, this will be a Web3 application (which may or may not exist at the time of writing) that implements ML/AI in a trustless manner. By trustless, we mean that it either runs in a trustless environment/platform or that its operation is provably verifiable. Note that not all ML/AI use cases (even in Web3) require or prefer to run in a trustless manner. We will analyze each part of the ML capabilities used in various Web3 domains. We will then identify the parts that require ZKML, typically high-value parts where people are willing to pay extra for a proof. Most of the use cases/applications mentioned below are still in the experimental research stage and are therefore still far from practical adoption. We’ll discuss why later.

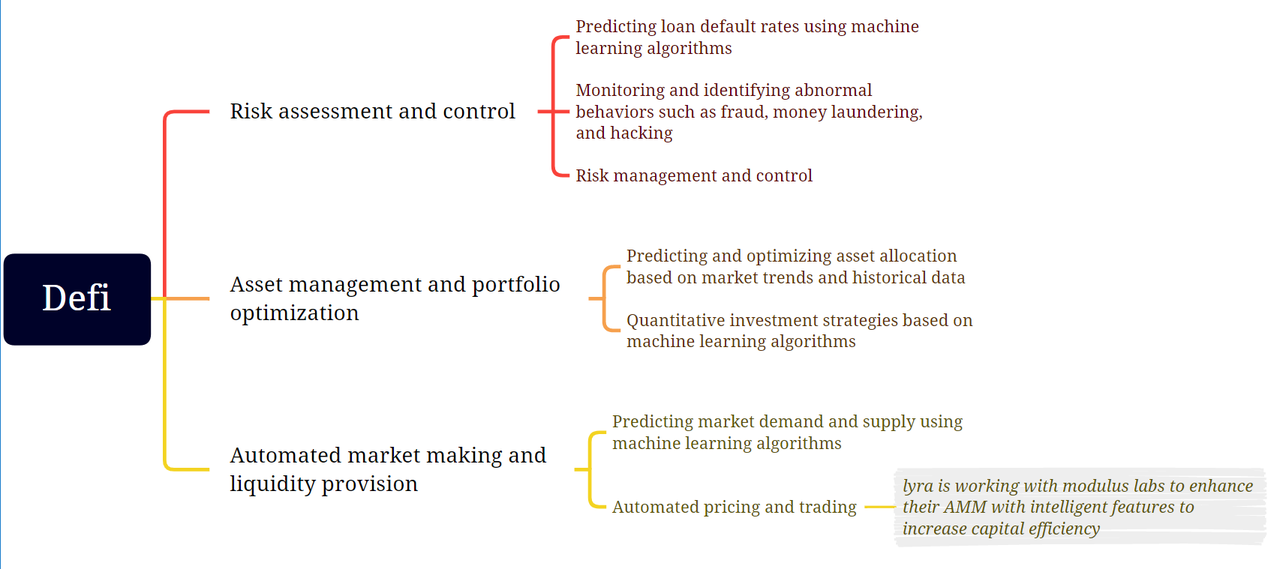

DeFi

DeFi is one of the few proofs of product-market fit among blockchain protocols and Web3 applications. The ability to create, store, and manage wealth and capital in a permissionless manner is unprecedented in human history. We have identified a number of use cases that require AI/ML models to run permissionlessly to ensure security and decentralization.

Risk assessment: Modern finance requires AI/ML models for various risk assessments, from preventing fraud and money laundering to issuing unsecured loans. Ensuring that such AI/ML models operate in a verifiable manner means we can prevent them from being manipulated into tools of censorship, which would undermine the permissionless nature of DeFi products.

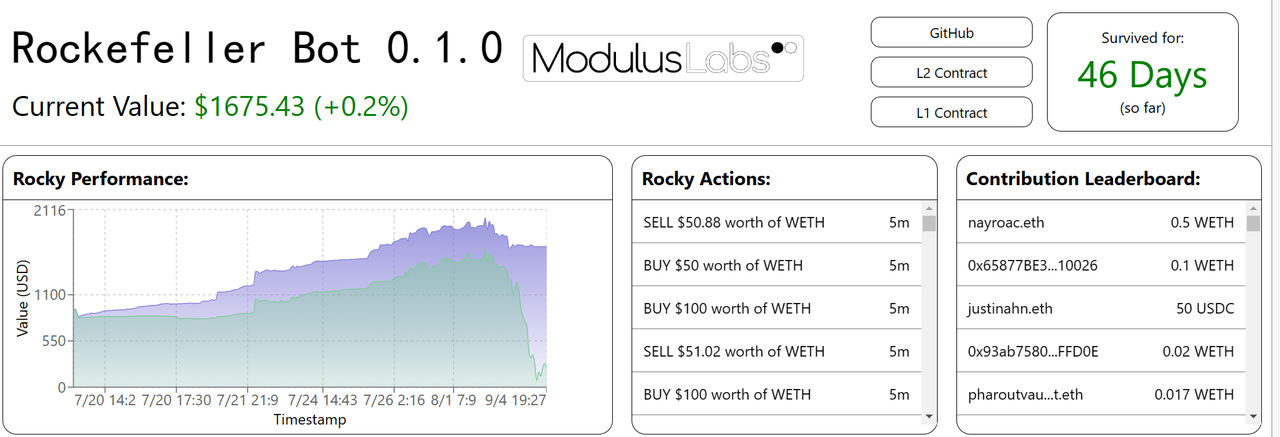

Asset management: Automated trading strategies are nothing new in either traditional finance or DeFi. There have been attempts to apply AI/ML-generated trading strategies, but only a few decentralized strategies have been successful. A typical application in DeFi today is Rocky Bot, an experiment by Modulus Labs.

- Rocky Bot: Modulus Labs created a trading bot using AI for decision-making on StarkNet.

-- A contract that holds funds on L1 and swaps WETH/USDC on Uniswap.

This applies to the output part of the ML trust framework. Output is generated on L2, transferred to L1, and used for execution. It cannot be tampered with during this process.

-- An L2 contract implementing a simple (but flexible) three-layer neural network for predicting future WETH prices. The contract uses historical WETH price information as input.

This applies to both the Input and Model sections. Historical price information input comes from the blockchain. The execution of the model is computed in CairoVM (a type of ZKVM), and its execution trace will generate a ZK proof for verification.

-- A simple front-end for visualization and PyTorch code for training regressors and classifiers.

Automated market makers and liquidity provision: Essentially, this combines similar efforts from risk assessment and asset management, just with different volumes, timelines, and asset types. There are many research papers on how to use ML for market making in the stock market. It may only be a matter of time before some of those research results are adapted to DeFi products.

- For example, LyraFinance is working with Modulus Labs to enhance its AMM with smart features to make its capital utilization more efficient.

Honorable mentions:

- The Warp.cc team has developed a tutorial project on how to deploy a smart contract that runs a trained neural network to predict Bitcoin prices. This aligns with the input and model parts of our framework, as the input uses data provided by RedStoneOracles and the model is executed on Arweave as a Warp smart contract.

- This is the first iteration and does not involve ZK, so it belongs among our honorable mentions, but the Warp team is considering implementing a ZK part in the future.

Gaming

Games and machine learning have many intersections:

The gray areas in the figure represent our initial assessment of whether the machine learning capabilities in the game portion need to be paired with corresponding ZKML proofs. Leela Chess Zero is a very interesting example of applying ZKML to a game:

AI agent

- Leela Chess Zero (LC0): An entirely on-chain AI chess player built by Modulus Labs, playing against a group of human players from the community.

-- LC0 and the human collective take turns playing the game (as it should be in chess).

-- LC0’s moves are computed by a simplified, circuit-friendly version of the LC0 model.

-- LC0’s moves come with a Halo2 SNARK proof to ensure there is no interference from a human brain trust. Only the simplified LC0 model is making the decisions.

- This is in line with the Model section. The execution of the model has a ZK proof to verify that the calculations have not been tampered with.

Data analysis and prediction: This has been a common use of AI/ML in the Web2 gaming world. However, we found very few reasons to bring ZK into this ML process. It’s probably not worth the effort, since not much value is directly at stake in the process. However, if certain analyses and predictions are used to determine rewards for users, then ZK may be implemented to ensure that the results are correct.

Honorable mentions:

- AI Arena is an Ethereum-native game where players from all over the world can design, train, and battle NFT characters powered by artificial neural networks. Talented researchers from around the world compete to create the best machine learning (ML) models to take part in gaming battles. AI Arena focuses on feedforward neural networks, which generally have lower computational overhead than convolutional neural networks (CNNs) or recurrent neural networks (RNNs). Still, models are currently only uploaded to the platform after training is complete, so it is an honorable mention.

- GiroGiro.AI is building an AI toolkit that enables the masses to create artificial intelligence for personal or commercial use. Users can create various types of AI systems based on the intuitive and automated AI workflow platform. By inputting only a small amount of data and selecting an algorithm (or model for improvement), users can generate and utilize the AI model they have in mind. Although the project is in the very early stages, we are very excited to see what GiroGiro can bring to game finance and metaverse-focused products, so we included it as an honorable mention.

DID and social

In the DID and social space, the intersection of Web3 and ML currently lies mainly in proof of humanity and proof of credentials; other parts may develop, but will take longer.

Proof of humanity

- Worldcoin uses a device called the Orb to determine whether someone is a real person rather than someone attempting to fraudulently pass verification. It does this by analyzing facial and iris features through various camera sensors and machine learning models. Once this determination is made, the Orb takes a set of pictures of the person’s irises and uses multiple machine learning models and other computer vision techniques to create an iris code, a digital representation of the most important features of an individual’s iris pattern. The specific registration steps are as follows:

-- The user generates a Semaphore key pair on their mobile phone and provides the hashed public key to the Orb via a QR code.

-- The Orb scans the user’s iris and computes the user’s IrisHash locally. It then sends a signed message containing the hashed public key and the IrisHash to the registration sequencer node.

-- The sequencer node verifies the Orb’s signature and then checks whether the IrisHash matches one already in the database. If the uniqueness check passes, the IrisHash and public key are saved.

- Worldcoin uses the open-source Semaphore zero-knowledge proof system to convert the uniqueness of the IrisHash into the uniqueness of a user account without linking the two. This ensures that a newly registered user can successfully claim their Worldcoin tokens. The steps are as follows:

-- The user’s application generates a wallet address locally.

-- The application uses Semaphore to prove that it possesses the private key of a previously registered public key. Because this is a zero-knowledge proof, it does not reveal which public key it is.

-- The proof is again sent to the sequencer, which verifies it and initiates the deposit of tokens to the provided wallet address. A so-called nullifier is sent along with the proof, ensuring that users cannot claim their rewards twice.

- Worldcoin uses ZK technology to ensure that the output of its ML models does not reveal users’ personal data, because the two cannot be linked. This falls under the output part of our trust framework, as it ensures that the output is transmitted and used in the desired manner, in this case privately.
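The bookkeeping behind the registration and claim steps above can be sketched as a toy sequencer. This is a hypothetical simplification, not Worldcoin's or Semaphore's actual code: the class and method names are invented, the zero-knowledge proof is replaced by a boolean placeholder, and the one-time claim tag (the role a nullifier plays in Semaphore) is derived with a plain hash.

```python
import hashlib

def sha(*parts: bytes) -> str:
    """Hash helper used for all identifiers in this toy example."""
    return hashlib.sha256(b"|".join(parts)).hexdigest()

class ToySequencer:
    """Hypothetical stand-in for the sequencer; no real ZK proofs are checked."""

    def __init__(self):
        self.iris_hashes = set()       # one entry per registered person
        self.public_keys = set()       # hashed public keys of registered users
        self.used_nullifiers = set()   # one-time tags that block double claims
        self.payouts = []              # wallets that received tokens

    def register(self, iris_hash: str, hashed_public_key: str) -> bool:
        if iris_hash in self.iris_hashes:
            return False               # uniqueness check failed
        self.iris_hashes.add(iris_hash)
        self.public_keys.add(hashed_public_key)
        return True

    def claim(self, nullifier: str, wallet: str, proof_ok: bool) -> bool:
        # `proof_ok` stands in for verifying a zero-knowledge proof that the
        # claimant holds the private key of SOME registered public key.
        if not proof_ok or nullifier in self.used_nullifiers:
            return False
        self.used_nullifiers.add(nullifier)
        self.payouts.append(wallet)    # the wallet is never tied to an iris hash
        return True

seq = ToySequencer()
print(seq.register(sha(b"iris-alice"), sha(b"pk-alice")))   # True
print(seq.register(sha(b"iris-alice"), sha(b"pk-other")))   # False: same iris

tag = sha(b"sk-alice", b"airdrop")   # one tag per (secret key, claim round)
print(seq.claim(tag, "0xabc", proof_ok=True))   # True
print(seq.claim(tag, "0xdef", proof_ok=True))   # False: tag already used
```

The sequencer stores iris hashes and claim tags in separate sets and never records which iris hash belongs to which wallet, which mirrors the unlinkability described above.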

Proof of action

- Astraly is a reputation-based token issuance platform on StarkNet, used to find and support the latest and greatest StarkNet projects. Measuring reputation is a challenging task because it is an abstract concept that cannot be easily quantified with simple metrics. When dealing with complex indicators, more comprehensive and diverse inputs tend to produce better results. That’s why Astraly turned to Modulus Labs for help in using ML models to provide more accurate reputation ratings.

Personalized recommendations and content filtering

- Twitter recently open-sourced the algorithm behind its “For You” timeline, but users cannot verify that the algorithm is running correctly because the weights of the ML model used to rank tweets are kept secret. This raises concerns about bias and censorship.

- However, Daniel Kang, Edward Gan, Ion Stoica, and Yi Sun used ezkl to provide a solution that helps balance privacy and transparency by proving that the Twitter algorithm runs faithfully without revealing the model weights. By using the ZKML framework, Twitter can commit to a specific version of its ranking model and publish a proof that it produces a specific final output ranking for a given user and tweet. This solution enables users to verify that the computation is correct without trusting the system. While there is still a lot of work to be done to make ZKML more usable, this is a positive step toward greater transparency in social media. So this falls under the “Model” part of our ML trust framework.

Revisiting the ML trust framework from a use case perspective

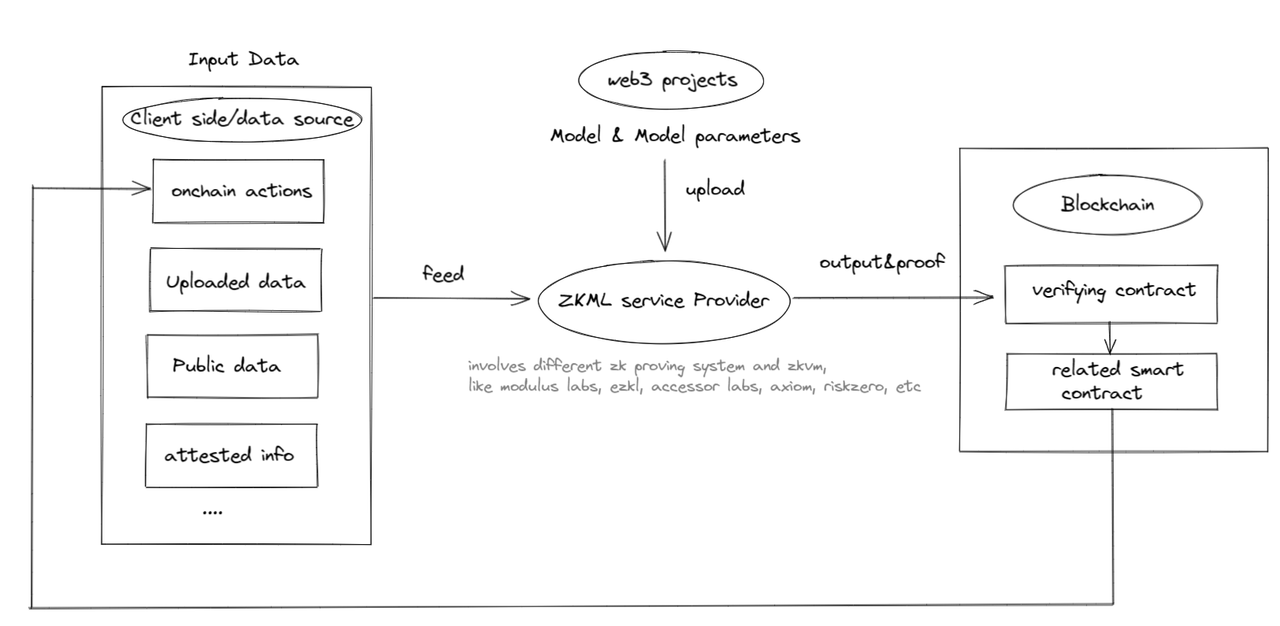

It can be seen that the potential use cases of ZKML in Web3 are still in their infancy, but they cannot be ignored; in the future, as the use of ZKML continues to expand, there may be a need for ZKML providers, forming a closed loop in the figure below:

ZKML service providers focus primarily on the model and parameter parts of the ML trust framework, although most of what we see today relates more to the model than to the parameters. It is important to note that the “input” and “output” parts are mostly addressed by blockchain-based solutions, with the blockchain serving either as a data source or as a data destination. ZK or blockchain alone may not achieve complete trustworthiness, but combined they may.

How far is it from large-scale application?

Finally, we can focus on the current feasibility status of ZKML and how far we are from large-scale application of ZKML.

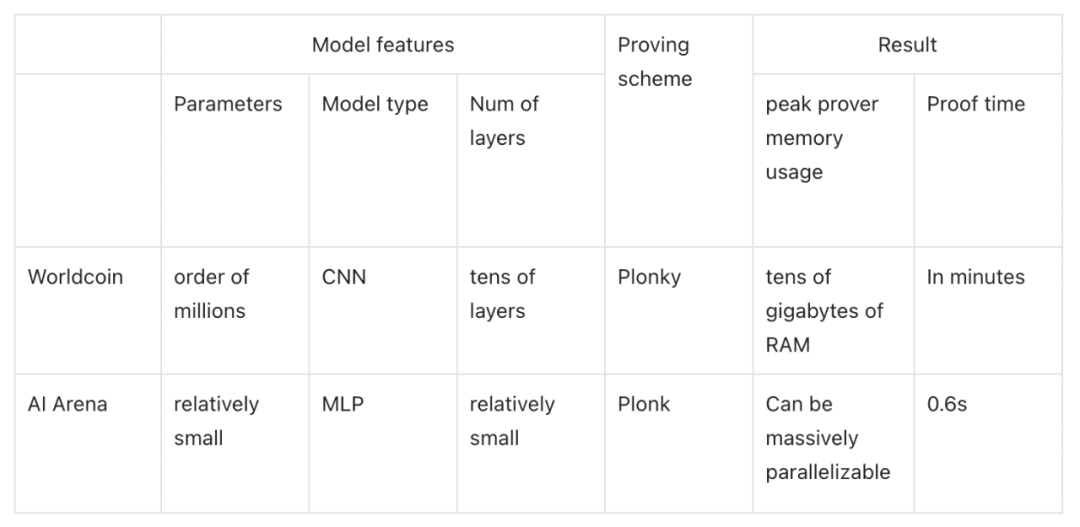

Modulus Labs’ paper provides us with some data and insights on the feasibility of ZKML applications by testing Worldcoin (with strict accuracy and memory requirements) and AI Arena (with cost-effectiveness and time requirements):

If Worldcoin used ZKML, the memory consumption of the prover would exceed the capabilities of any commercial mobile hardware. If AI Arena tournaments used ZKML, using ZKCNN would increase the time and cost by roughly 100 times (0.6 s compared to the original 0.008 s, a factor of 75). So unfortunately, neither is a suitable candidate for applying ZKML directly, given the proving time and prover memory requirements.

What about proof size and verification time? We can refer to the paper by Daniel Kang, Tatsunori Hashimoto, Ion Stoica, and Yi Sun. As shown below, their DNN inference solution can achieve 79% accuracy on ImageNet (model type: DCNN, 16 layers, 3.4 million parameters) with a verification time of only 10 seconds and a proof size of 5,952 bytes. Additionally, the zkSNARK can be scaled down to 59% accuracy with a verification time of just 0.7 seconds. These results show that zkSNARKing ImageNet-scale models is feasible in terms of proof size and verification time.

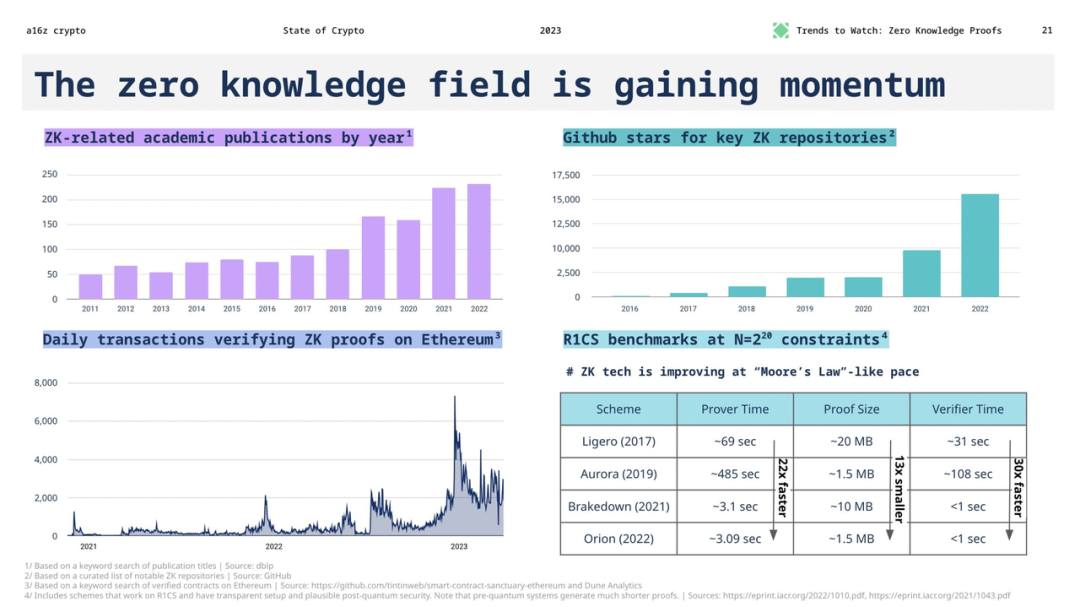

The main technical bottlenecks at present are proving time and memory consumption. It is not yet technically feasible to apply ZKML in Web3 use cases. Does ZKML have the potential to catch up with the development of AI? We can compare several empirical data points:

The speed of development of machine learning models: The GPT-1 model released in 2018 has about 117 million parameters, while the GPT-3 model released in 2020 has 175 billion parameters, a roughly 1,500-fold increase in the number of parameters in just two years.

Optimization speed of zero-knowledge systems: The performance growth of zero-knowledge systems basically follows a Moore’s Law pace. New zero-knowledge systems emerge almost every year, and we expect the rapid growth in prover performance to continue for some time.

Judging from this data, although machine learning models are developing very quickly, the optimization speed of zero-knowledge proof systems is also steadily increasing. ZKML may still have the opportunity to gradually catch up with the development of AI, but it will need continuous technological innovation and optimization to narrow the gap. This means that although ZKML currently faces technical bottlenecks in Web3 applications, we still have reason to expect it to play a greater role in Web3 scenarios as zero-knowledge proof technology continues to develop. Comparing the improvement rates of cutting-edge ML and ZK directly, the outlook is not very optimistic. However, with continued improvements in convolution performance, ZK hardware, and ZK proof systems tailored to highly structured neural network operations, we hope ZKML development can meet the needs of Web3, starting with some good old-fashioned machine learning functionality.

While it may be difficult for us to use blockchain + ZK to verify that the information ChatGPT feeds back to me is trustworthy, we may be able to fit some smaller and older ML models into the ZK circuit.

Conclusion

"Power tends to corrupt, and absolute power corrupts absolutely." With the incredible power of AI and ML, there is currently no foolproof way to bring it under governance. Facts have shown time and again that governments either intervene late, after the damage is done, or impose outright bans in advance. Blockchain + ZK offers one of the few solutions that can tame the beast in a provable and verifiable way.

We look forward to seeing more product innovation in the field of ZKML. ZK and blockchain provide a safe and trustworthy environment for running AI/ML. We also expect these product innovations to generate entirely new business models, because in a permissionless crypto world we are not limited to the de facto SaaS monetization model. We look forward to supporting more builders who bring their exciting ideas to this fascinating overlap of "Wild West anarchy" and "Ivory Tower elites." We’re still in the early stages, but we may already be on our way to saving the world.

This article is an original work by the SevenX research team. It is for communication and learning purposes only and does not constitute investment advice. If you cite it, please indicate the source.